Why You Need Real User Monitoring to Really Understand Your Web Performance

Synthetic testing shows perfect scores, but users complain your site is slow. Real User Monitoring reveals the gap between lab performance and real-world experience for actual users.

Great Lighthouse scores, but your site is still slow. Sound familiar?

You’ve run PageSpeed Insights, Request Metrics, and every other synthetic test you can find. Your scores look great. But your analytics shows users bouncing, conversions dropping, and complaints about “slow pages.” What’s going on?

The answer is simple: synthetic testing only tells you how your site performs in a test, not how it performs for real users in the real world. And the gap between lab performance and real-world performance is huge.

Here’s the shocking truth: Google’s own research found that nearly 50% of websites with perfect Lighthouse scores still fail Core Web Vitals when measured with real user data. Half! That means roughly half of the sites celebrating perfect scores are still delivering poor performance to their users.

To understand how your website really performs, you need Real User Monitoring (RUM).

What Real User Monitoring Actually Tells You (That Lighthouse Can’t)

Real User Monitoring does exactly what the name suggests: it monitors real users as they interact with your website. Instead of simulating user conditions in a test environment, RUM collects performance data from actual user sessions as they happen.

When a real person visits your site on their actual device, using their actual network connection, RUM captures their experience. Did the page load quickly? Did they experience lag when clicking buttons? How long did it take for the page to become interactive? RUM answers these questions with data from real usage, not simulated conditions.

The key difference is test conditions versus real-world chaos. Synthetic testing runs in perfect, controlled environments. Real User Monitoring captures the messy, unpredictable reality of how people actually use your website.

How Real User Monitoring Works

RUM works by adding a small piece of JavaScript to your website that runs alongside your normal page content. This JavaScript agent uses built-in browser APIs to collect performance data as users interact with your site.

When someone visits your page, the RUM script automatically measures things like:

- How long it took for the page to load

- When the page became interactive

- How long animations and interactions took to complete

- Any errors or slowdowns that occurred

The browser itself provides most of this data through APIs like the Navigation Timing API and the PerformanceObserver interface. The RUM script simply collects these measurements and sends them to a reporting service where you can analyze the data.
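To make those moving parts concrete, here is a minimal sketch of what such a script might look like, written in TypeScript. It is not Request Metrics' agent: the collection endpoint, the payload fields, and the function names are hypothetical. It reads the navigation entry from the Navigation Timing API, watches Largest Contentful Paint and layout shifts with `PerformanceObserver`, and sends one JSON beacon with `navigator.sendBeacon` when the page is hidden.

```typescript
// Minimal RUM agent sketch. Endpoint, payload shape, and names are hypothetical;
// production agents handle many more edge cases (bfcache restores, SPAs, sampling).

// Subset of PerformanceNavigationTiming fields this sketch reads
// (milliseconds relative to the start of the navigation).
interface NavTiming {
  startTime: number;
  domainLookupStart: number;
  domainLookupEnd: number;
  connectStart: number;
  connectEnd: number;
  responseStart: number;
  domContentLoadedEventEnd: number;
  loadEventEnd: number;
}

// Layout-shift entries are not in TypeScript's DOM typings, so declare the fields used.
interface LayoutShiftEntry extends PerformanceEntry {
  value: number;
  hadRecentInput: boolean;
}

// Turn raw navigation timestamps into the durations a dashboard would chart.
function summarizeNavigation(t: NavTiming) {
  return {
    dnsMs: t.domainLookupEnd - t.domainLookupStart,
    connectMs: t.connectEnd - t.connectStart,
    ttfbMs: t.responseStart - t.startTime,
    domContentLoadedMs: t.domContentLoadedEventEnd - t.startTime,
    loadMs: t.loadEventEnd - t.startTime,
  };
}

function startRum(endpoint: string): void {
  // Do nothing outside a browser (server-side rendering, tests, very old browsers).
  if (typeof window === "undefined" || typeof PerformanceObserver === "undefined") return;

  let lcpMs: number | undefined;
  let cls = 0;

  // The most recent LCP candidate is the current LCP; browsers stop reporting after user input.
  new PerformanceObserver((list) => {
    const entries = list.getEntries();
    if (entries.length > 0) lcpMs = entries[entries.length - 1].startTime;
  }).observe({ type: "largest-contentful-paint", buffered: true });

  // Simplified CLS: a running sum of shifts not caused by user input
  // (Chrome's official definition groups shifts into session windows instead).
  new PerformanceObserver((list) => {
    for (const entry of list.getEntries() as LayoutShiftEntry[]) {
      if (!entry.hadRecentInput) cls += entry.value;
    }
  }).observe({ type: "layout-shift", buffered: true });

  let sent = false;
  // Page hide is the last reliable moment to report; unload events often never fire on mobile.
  window.addEventListener("visibilitychange", () => {
    if (sent || document.visibilityState !== "hidden") return;
    const [nav] = performance.getEntriesByType("navigation") as PerformanceNavigationTiming[];
    if (!nav) return;
    sent = true;
    const payload = { url: location.href, ...summarizeNavigation(nav), lcpMs, cls };
    navigator.sendBeacon(endpoint, JSON.stringify(payload));
  });
}

startRum("https://example.com/rum"); // hypothetical collection endpoint
```

In production this would be compiled to plain JavaScript and loaded early in the page; the `buffered: true` flag lets the observers pick up entries recorded before the script ran.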

This approach gives you performance data that reflects real network conditions, real devices, real user behavior patterns, and real-world usage scenarios—something synthetic testing simply cannot provide.

For a detailed technical comparison between synthetic and real user approaches, check out our guide on Synthetic Testing and Real User Monitoring.

Real User Monitoring vs Synthetic Monitoring: What You’re Missing

We’ve written extensively about the limitations of Lighthouse, but it’s worth highlighting the key blind spots that affect every synthetic testing tool.

Lighthouse tries to simulate an “85th percentile user,” but that simulation is usually wrong for your site. Your users aren’t Google’s generic average. A developer-focused B2B tool has completely different users than a consumer social app. Lighthouse can’t know the difference, so it makes assumptions that often don’t fit your specific audience.

Consider Google’s own Gmail, which scores terribly on Lighthouse despite being one of the snappiest web applications ever built. Lighthouse doesn’t understand that Gmail’s initial load time matters far less than usual, because users keep it open all day and interact with a fast, responsive interface. The performance that matters happens after the initial load, which is something synthetic testing completely misses.
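Measuring the performance that happens after the initial load comes down to timing interactions. In browsers that support it, the Event Timing API reports each event's latency along with an `interactionId`, and Interaction to Next Paint (INP) summarizes a visit by roughly its worst interaction, ignoring one outlier for every 50 interactions. The sketch below applies that rule to entries a RUM script has already collected; the `InteractionEntry` shape is an assumption that mirrors two fields of Event Timing entries, and Google's `web-vitals` library remains the reference implementation.

```typescript
// Sketch of INP-style aggregation over Event Timing data.
// InteractionEntry mirrors two fields of PerformanceEventTiming; the names are otherwise hypothetical.
interface InteractionEntry {
  interactionId: number; // 0 means the event was not part of a discrete interaction
  duration: number; // ms from input to next paint: input delay + processing + presentation
}

function approximateInp(entries: InteractionEntry[]): number | undefined {
  // Several events (pointerdown, pointerup, click) can share one interactionId;
  // an interaction's latency is the longest of them.
  const longestById = new Map<number, number>();
  for (const e of entries) {
    if (e.interactionId === 0) continue;
    const previous = longestById.get(e.interactionId) ?? 0;
    longestById.set(e.interactionId, Math.max(previous, e.duration));
  }
  const latencies = Array.from(longestById.values()).sort((a, b) => b - a); // slowest first
  if (latencies.length === 0) return undefined;
  // Skip one of the slowest interactions per 50, so a single hiccup on a long-lived
  // page (like an inbox left open all day) doesn't define the whole visit.
  const skip = Math.min(Math.floor(latencies.length / 50), latencies.length - 1);
  return latencies[skip];
}
```

For a page people keep open for hours, this kind of per-interaction number says far more about perceived speed than a one-time load score.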

Real-world variables that synthetic tests can’t simulate:

- Network conditions beyond simple throttling (packet loss, latency spikes, congestion)

- Device thermal throttling during extended usage sessions

- Background apps competing for resources

- Browser extensions affecting performance

- User behavior patterns (scrolling, multitasking, multiple tabs)

- Geographic network infrastructure differences

- Time-of-day performance variations

- Third-party vendor performance and outages affecting your site

Get your free speed check to see how your website performs for real users.


Why Chrome User Experience Report (CrUX) is Just “RUM Lite”

The Chrome User Experience Report provides real user data, which sounds great—but it’s like the lite beer of Real User Monitoring. Sure, it’s better than nothing if you have a lot of it, but it lacks the substance you need to make real decisions.

CrUX does show data from your specific website, but page-level data exists only for pages with enough traffic, and everything arrives as aggregate distributions with only coarse breakdowns such as device type. It’s like getting a report that says “your restaurant had mixed reviews this month” without knowing which dishes, which servers, or which times of day caused problems. You know something might be off, but you can’t do anything about it.

CrUX reports data over a rolling 28-day window, so a new performance problem can take days or even weeks to move its numbers enough to notice. In web time, that’s an eternity. Your users could be suffering through slow experiences while you’re celebrating last month’s good CrUX scores.
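The arithmetic behind that lag is easy to sketch. Assume, purely hypothetically, steady daily traffic, an LCP that was 2.0 s for every page view, and a deploy that makes every page view 3.5 s. CrUX reports the 75th percentile over a trailing 28-day window, so the reported value only crosses the 2.5 s “good” boundary once slow page views make up more than a quarter of the window. The toy simulation below counts the days; it stands in for CrUX's histogram-based percentiles with a simple nearest-rank percentile.

```typescript
// Toy model of a rolling-window p75 after a sudden, site-wide regression.
// Every input is an illustrative assumption, not CrUX internals.

// Nearest-rank percentile (p from 0 to 100) of an unsorted array.
function percentile(values: number[], p: number): number {
  const sorted = values.slice().sort((a, b) => a - b);
  const rank = Math.ceil((p / 100) * sorted.length);
  return sorted[Math.max(rank - 1, 0)];
}

// Returns the first day on which the windowed p75 exceeds the threshold,
// or undefined if it never does within one full window.
function daysUntilWindowedP75Exceeds(
  beforeMs: number, // LCP of every page view before the regression
  afterMs: number, // LCP of every page view after the regression
  thresholdMs: number, // e.g. 2500, the upper bound of "good" LCP
  windowDays = 28,
  viewsPerDay = 100,
): number | undefined {
  for (let day = 1; day <= windowDays; day++) {
    const samples: number[] = [];
    // The window still holds (windowDays - day) days of pre-regression traffic...
    for (let i = 0; i < (windowDays - day) * viewsPerDay; i++) samples.push(beforeMs);
    // ...plus `day` days of post-regression traffic.
    for (let i = 0; i < day * viewsPerDay; i++) samples.push(afterMs);
    if (percentile(samples, 75) > thresholdMs) return day;
  }
  return undefined;
}

const firstFlaggedDay = daysUntilWindowedP75Exceeds(2000, 3500, 2500); // 8
```

With these numbers the function returns 8: more than a week passes before a regression that hits every visitor flips the reported p75, and in this toy model a regression that slows down a quarter or fewer of page views never flips it at all.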

CrUX data is public, which makes it excellent for benchmarking against competitors. You can see how your site’s Core Web Vitals compare to others in your industry. But because it’s public, it’s aggregated to protect user privacy, which leaves it too coarse for making optimization decisions about your specific site. You know there might be a problem, but not where, when, or why.

CrUX gives you the 30,000-foot view. Real User Monitoring gives you the ground-level intelligence you need to actually improve your site.

Now let’s look at the specific problems that only Real User Monitoring can catch.

Real User Monitoring Benefits: Problems Only RUM Can Catch

Here are the performance issues that synthetic testing will never reveal, but Real User Monitoring catches immediately:

Dynamic Content Performance Issues

Your website probably shows different content to different users. Personalized experiences, user-generated content, different product catalogs, A/B test variations—none of this complexity appears in synthetic testing.

RUM reveals how these dynamic elements affect real performance. Maybe your personalization engine is fast for new users but slows down for power users with complex histories. Maybe your A/B test is tanking performance for the variant group. Synthetic testing would never catch these issues because it can’t simulate your actual user diversity.
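Catching a variant that tanks performance is mostly a matter of tagging each RUM sample with the variant the user saw and comparing a percentile per group. The sketch below assumes a hypothetical `RumSample` record with a `segment` label (an experiment variant, a personalization tier, and so on) and an LCP value, and reports the 75th percentile per segment, the same percentile Core Web Vitals assessments use.

```typescript
// Compare p75 LCP across segments such as A/B variants.
// RumSample and its fields are hypothetical; use whatever your RUM tool records.
interface RumSample {
  segment: string; // e.g. "control" or "variant-b"
  lcpMs: number;
}

// Nearest-rank percentile of an unsorted array.
function percentile(values: number[], p: number): number {
  const sorted = values.slice().sort((a, b) => a - b);
  return sorted[Math.max(Math.ceil((p / 100) * sorted.length) - 1, 0)];
}

function p75BySegment(samples: RumSample[]): Map<string, { count: number; p75: number }> {
  const groups = new Map<string, number[]>();
  for (const s of samples) {
    const list = groups.get(s.segment) ?? [];
    list.push(s.lcpMs);
    groups.set(s.segment, list);
  }
  const result = new Map<string, { count: number; p75: number }>();
  groups.forEach((values, segment) => {
    // Keep the sample count: a p75 from a handful of page views is mostly noise.
    result.set(segment, { count: values.length, p75: percentile(values, 75) });
  });
  return result;
}
```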

The Long Tail of Performance Problems

Every website has edge cases and unusual scenarios that affect real users but would never appear in synthetic testing:

- That specific Android phone model that struggles with your animations

- Users in geographic regions with different network infrastructure

- Performance degradation during high-traffic periods

- Third-party service failures affecting real users

- Progressive performance degradation over long user sessions

These “long tail” issues might affect a small percentage of users, but they often affect your most valuable users—the ones who engage deeply with your site.
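Long-tail problems are exactly what averages and medians hide, which is why RUM dashboards typically report several percentiles side by side. In the small, made-up sample below, 18 page views are fast and 2 are very slow (say, from one underpowered phone model). The median and even the 75th percentile look healthy, while the 95th percentile exposes the tail. Nearest rank is just one of several common percentile methods.

```typescript
// Summarize a latency distribution with a mean and several percentiles.

// Nearest-rank percentile on an array already sorted ascending.
function percentile(sorted: number[], p: number): number {
  return sorted[Math.max(Math.ceil((p / 100) * sorted.length) - 1, 0)];
}

function summarize(valuesMs: number[]) {
  const sorted = valuesMs.slice().sort((a, b) => a - b);
  const mean = sorted.reduce((sum, v) => sum + v, 0) / sorted.length;
  return {
    mean: Math.round(mean),
    p50: percentile(sorted, 50),
    p75: percentile(sorted, 75),
    p95: percentile(sorted, 95),
  };
}

// Made-up LCP samples in milliseconds: 18 fast page views and 2 slow ones.
const lcpSamples = [
  1200, 1300, 1300, 1400, 1400, 1500, 1500, 1500, 1600, 1600,
  1600, 1700, 1700, 1800, 1800, 1900, 1900, 2000, 7800, 9400,
];
const summary = summarize(lcpSamples);
console.log(summary); // { mean: 2295, p50: 1600, p75: 1800, p95: 7800 }
```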

Business-Critical Performance Scenarios

RUM shows you which performance problems actually hurt your bottom line:

Are slow pages costing you sales? RUM can tell you exactly which performance issues make users bounce before buying. Maybe your product pages load fine in testing but struggle with real product catalogs and user reviews.
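One way to ask whether slow pages cost sales is to bucket sessions by their LCP and compare conversion rates per bucket. The sketch below uses Google's published LCP boundaries (good up to 2.5 s, poor above 4 s); the `Session` record, which assumes you can join a RUM measurement with an order outcome, is hypothetical. A gap between buckets shows correlation, not proof that speed alone caused it.

```typescript
// Conversion rate per LCP rating bucket. Session is a hypothetical record that joins
// a RUM measurement with a business outcome from your own analytics.
interface Session {
  lcpMs: number;
  converted: boolean;
}

type LcpBucket = "good" | "needs-improvement" | "poor";

// Core Web Vitals boundaries for LCP: <= 2500 ms is good, > 4000 ms is poor.
function lcpBucket(lcpMs: number): LcpBucket {
  if (lcpMs <= 2500) return "good";
  if (lcpMs <= 4000) return "needs-improvement";
  return "poor";
}

function conversionByBucket(sessions: Session[]): Record<LcpBucket, number | null> {
  const totals: Record<LcpBucket, { sessions: number; conversions: number }> = {
    good: { sessions: 0, conversions: 0 },
    "needs-improvement": { sessions: 0, conversions: 0 },
    poor: { sessions: 0, conversions: 0 },
  };
  for (const s of sessions) {
    const t = totals[lcpBucket(s.lcpMs)];
    t.sessions += 1;
    if (s.converted) t.conversions += 1;
  }
  // null marks a bucket with no sessions instead of a misleading 0% rate.
  const rate = (b: LcpBucket) =>
    totals[b].sessions === 0 ? null : totals[b].conversions / totals[b].sessions;
  return { good: rate("good"), "needs-improvement": rate("needs-improvement"), poor: rate("poor") };
}
```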

Is mobile really slower than desktop? You might assume mobile is always the slow path, but RUM shows you the reality. Maybe your mobile checkout flow is actually faster because it’s simpler, or maybe it’s a disaster because of third-party payment widgets.

What happens during your Black Friday traffic spike? Synthetic testing can’t simulate real load on your servers, payment processors, and CDN. RUM captures what actually happens when thousands of people try to buy your stuff at once.

How do your fancy search filters perform with real data? Testing with a clean database is one thing. RUM shows you how search and filtering work with thousands of real products, complex queries, and messy real-world data.

Real User Performance Monitoring Tools in Action: What You Actually Get

Installing RUM gives you immediate access to performance intelligence that changes how you think about your website.

What You Actually See When You Install RUM

When you install RUM, you immediately start seeing things that will surprise you. Maybe your mobile users aren’t actually slower—they’re just using your site differently. Maybe that page you thought was fast is actually driving people away because it’s slow on the devices your users actually have.

You’ll see which performance problems cost you real money. That checkout page that loads fine in testing? RUM might show you it’s actually slow for users with older phones, and those users abandon their carts. That’s actionable data you can’t get anywhere else.

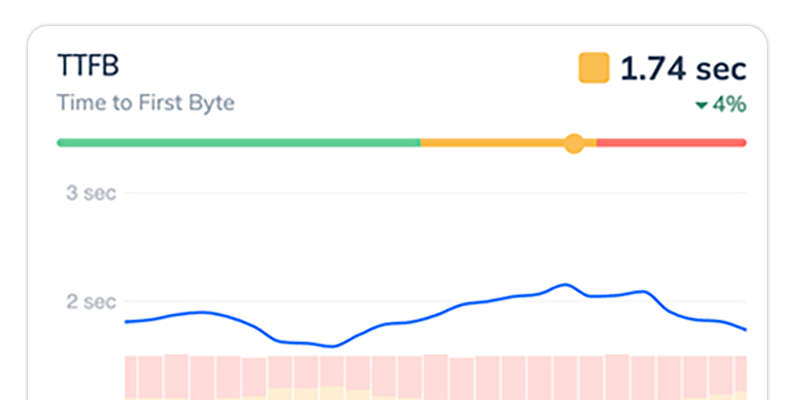

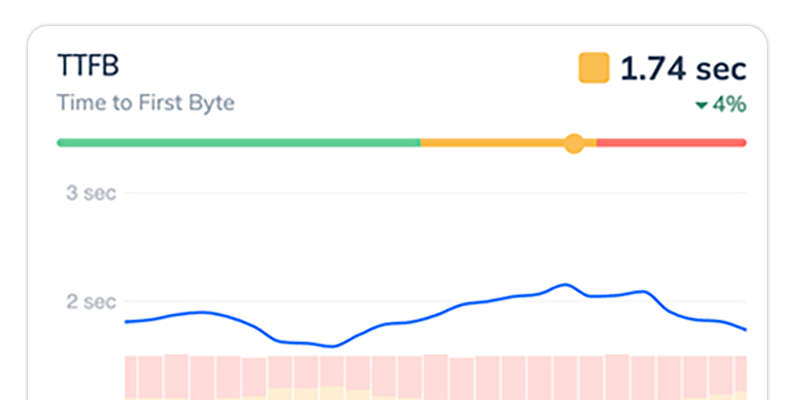

RUM Keeps Watching So You Don’t Have To

Unlike running Lighthouse once a week, RUM never stops monitoring. It’s like having someone constantly watching your site and tapping you on the shoulder when things go wrong.

You get alerts that actually matter. Not “your LCP went from 2.1 to 2.3 seconds,” but “your checkout page just got slow and people are bailing.” RUM can tell you when a code deploy made things worse, when a third-party service is dragging you down, or when traffic spikes are overwhelming your servers.
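An alert like “your checkout page just got slow” can be as simple as comparing a page's p75 before and after a deploy, and only firing when the change is both large and backed by enough samples. The thresholds in this sketch (a 20% increase, at least 200 samples on each side) are arbitrary placeholders, and `checkDeployRegression` is a hypothetical helper, not how Request Metrics decides when to alert.

```typescript
// Flag a page whose p75 got meaningfully worse after a deploy.
// maxIncrease and minSamples are placeholder values to tune for your traffic.
function percentile(values: number[], p: number): number {
  const sorted = values.slice().sort((a, b) => a - b);
  return sorted[Math.max(Math.ceil((p / 100) * sorted.length) - 1, 0)];
}

interface RegressionCheck {
  regressed: boolean;
  beforeP75?: number;
  afterP75?: number;
  reason: string;
}

function checkDeployRegression(
  beforeMs: number[], // samples from the window before the deploy
  afterMs: number[], // samples from the window after the deploy
  maxIncrease = 0.2, // alert if p75 rises by more than 20%
  minSamples = 200, // ignore windows too small to trust
): RegressionCheck {
  if (beforeMs.length < minSamples || afterMs.length < minSamples) {
    return { regressed: false, reason: "not enough samples yet" };
  }
  const beforeP75 = percentile(beforeMs, 75);
  const afterP75 = percentile(afterMs, 75);
  const regressed = afterP75 > beforeP75 * (1 + maxIncrease);
  return {
    regressed,
    beforeP75,
    afterP75,
    reason: regressed ? "p75 rose more than the allowed margin" : "within normal range",
  };
}
```

Requiring a minimum sample count is what keeps an alert like this from firing on a handful of unlucky page views.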

You see patterns over time. Maybe your site gets slower every Tuesday (server maintenance?). Maybe performance tanks during lunch hours when your team deploys. Maybe that new feature you shipped last month has been slowly degrading performance. RUM catches this stuff.

What You See When RUM Finds Problems

When Request Metrics RUM spots a problem, it doesn’t just say “something’s slow.” It tells you what’s slow, for whom, and when.

You see the user’s whole story. Maybe users bounce on your pricing page, but only after they’ve struggled through a slow product search. That’s a different problem than users bouncing immediately. RUM connects the dots between performance and user behavior.

You get real device and browser data. Not “simulated iPhone,” but real iPhones on Verizon in Chicago. You can see if your performance problems are specific to certain devices, browsers, or network conditions that your real users actually have.
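Device and network breakdowns start with context the RUM script attaches to each beacon. The sketch below reads `navigator.hardwareConcurrency` plus `navigator.deviceMemory` and `navigator.connection`, which at the time of writing are Chromium-only, so they are treated as optional and typed by hand. Carrier and city are not exposed to page scripts at all; RUM services typically derive them server-side from the request's IP address. The `DeviceContext` shape is hypothetical.

```typescript
// Collect coarse device and network context to attach to each RUM beacon.
// NavigatorLike declares optional, non-standard fields by hand because
// TypeScript's DOM typings do not include them.
interface NavigatorLike {
  userAgent: string;
  hardwareConcurrency?: number;
  deviceMemory?: number; // approximate GiB; Chromium-only
  connection?: { effectiveType?: string; rtt?: number; saveData?: boolean }; // Chromium-only
}

interface DeviceContext {
  userAgent: string;
  cpuCores: number | null;
  memoryGb: number | null;
  effectiveConnection: string | null; // "slow-2g" | "2g" | "3g" | "4g" when available
  rttMs: number | null;
  saveData: boolean;
}

function describeDevice(nav: NavigatorLike): DeviceContext {
  return {
    userAgent: nav.userAgent,
    cpuCores: nav.hardwareConcurrency ?? null,
    memoryGb: nav.deviceMemory ?? null,
    effectiveConnection: nav.connection?.effectiveType ?? null,
    rttMs: nav.connection?.rtt ?? null,
    saveData: nav.connection?.saveData === true,
  };
}

// In a browser: const context = describeDevice(navigator);
```

Recording `null` rather than guessing keeps Safari and Firefox sessions honest in the data instead of silently lumping them into a default bucket.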

You spot the weird edge cases. Like that one page that’s only slow for users who came from Google Ads, or the form that gets laggy after people have been on your site for more than 10 minutes. Synthetic testing would never catch this stuff.
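Edge cases like these only surface if each measurement carries the right tags. This sketch labels an interaction with a coarse traffic source and how many minutes into the page visit it happened. It relies on the `gclid` URL parameter that Google Ads auto-tagging appends and on the conventional `utm_source` campaign parameter; the source labels and the `tagInteraction` helper are hypothetical.

```typescript
// Tag an interaction with where the visitor came from and how long they've been on the page,
// so "slow only for ad traffic" or "laggy after 10 minutes" becomes a filterable segment.
type TrafficSource = "google-ads" | "campaign" | "referral" | "direct";

function classifySource(landingUrl: string, referrer: string): TrafficSource {
  const params = new URL(landingUrl).searchParams;
  if (params.has("gclid")) return "google-ads"; // appended by Google Ads auto-tagging
  if (params.has("utm_source")) return "campaign";
  return referrer ? "referral" : "direct";
}

interface TaggedInteraction {
  durationMs: number;
  source: TrafficSource;
  minutesOnPage: number;
}

function tagInteraction(
  durationMs: number,
  landingUrl: string, // e.g. location.href captured when the page loaded
  referrer: string, // e.g. document.referrer
  msSinceNavigation: number, // e.g. performance.now() when the interaction happened
): TaggedInteraction {
  return {
    durationMs,
    source: classifySource(landingUrl, referrer),
    minutesOnPage: Math.floor(msSinceNavigation / 60000),
  };
}
```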

Why You Need Both (But RUM Reveals What Actually Matters)

Don’t misunderstand: synthetic testing still has value. A complete performance strategy uses both approaches.

Synthetic Testing: Good for Development and Investigation

As we covered in our Lighthouse limitations article, synthetic testing excels at:

- Catching obvious issues before release in a controlled environment

- Investigating specific problems once you know they exist (RUM tells you there’s a problem, Lighthouse helps you understand why)

- Consistent testing environment for A/B testing code changes during development

Think of synthetic testing as your development safety net. It prevents you from shipping obviously broken performance, but it can’t tell you how your site actually performs for real users.

RUM: Essential for Understanding Production Reality

Real User Monitoring is where you discover what actually needs fixing in production:

- Actual user experience measurement across all your user segments and conditions

- Business impact quantification showing which performance issues cost money and which don’t

- Real-world performance validation under the conditions that actually matter

- Continuous monitoring and alerting for production issues affecting real users

The Complete Performance Strategy

The most effective approach combines both tools strategically:

- Use synthetic tests during development to prevent shipping obvious performance problems

- Use RUM to discover what actually needs fixing in production with real users

- Cross-reference findings: when RUM shows a problem, investigate with synthetic testing to understand the root cause

- Validate fixes with RUM to ensure your improvements actually help real users

For a deeper technical comparison of when to use each approach, read our detailed guide on Synthetic Testing and Real User Monitoring.

How to Do Real User Monitoring: Beyond the Demo

If you’ve watched RUM demos but weren’t sure why you’d bother installing it, here’s what you can expect when you actually implement Real User Monitoring:

What to Expect When You Install RUM

First 24 hours: RUM establishes your performance baseline. You’ll start seeing data about how your site actually performs for real users, often revealing surprises. Maybe your mobile performance is worse than you thought, or maybe a specific page that seemed fast in testing is actually slow for real users.

First week: Patterns emerge. You’ll see which pages consistently have performance issues, which user segments struggle the most, and which times of day or days of the week show performance degradation. This is when RUM starts answering questions you didn’t even know you had.

First month: You can analyze trends and identify optimization opportunities based on real user impact. You’ll have enough data to prioritize performance improvements based on actual business impact rather than synthetic scores.

Ongoing: RUM becomes your continuous performance intelligence system, alerting you to problems as they happen and helping you measure the real-world impact of every change you make.

Implementation Best Practices

Key metrics to monitor from day one:

- Core Web Vitals (LCP, INP, CLS) from real users

- Page load times across different user segments

- Error rates and their correlation with performance issues

- Business metrics like conversion rates and bounce rates tied to performance data
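Reporting Core Web Vitals from real users usually means rating each page's 75th-percentile value against Google's published boundaries. The sketch below encodes those boundaries (LCP 2.5 s and 4 s, INP 200 ms and 500 ms, CLS 0.1 and 0.25); the `rate` and `passesCoreWebVitals` helpers are illustrative names, not part of any library.

```typescript
// Rate Core Web Vitals values against Google's published "good" / "poor" boundaries.
// At or below `good` is good; above `poor` is poor; anything in between needs improvement.
type Metric = "LCP" | "INP" | "CLS";
type Rating = "good" | "needs-improvement" | "poor";

const THRESHOLDS: Record<Metric, { good: number; poor: number }> = {
  LCP: { good: 2500, poor: 4000 }, // milliseconds
  INP: { good: 200, poor: 500 }, // milliseconds
  CLS: { good: 0.1, poor: 0.25 }, // unitless layout-shift score
};

function rate(metric: Metric, value: number): Rating {
  const { good, poor } = THRESHOLDS[metric];
  if (value <= good) return "good";
  if (value <= poor) return "needs-improvement";
  return "poor";
}

// A page passes when the p75 of every metric is rated good.
function passesCoreWebVitals(p75: Record<Metric, number>): boolean {
  return (Object.keys(THRESHOLDS) as Metric[]).every((m) => rate(m, p75[m]) === "good");
}
```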

Focus on trends, not arbitrary thresholds. Instead of getting alerts every time a metric crosses some magic number, track whether your performance is improving or getting worse over time. A gradual decline in performance is often more important than a single spike, and trending data helps you spot problems before they become disasters.
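One simple way to watch the trend rather than a threshold is to fit a straight line through daily p75 values and look at its slope: a steady upward slope is the gradual decline worth catching, even when no single day looks alarming. This is an ordinary least-squares fit over made-up daily values; real monitoring tools may use more robust methods.

```typescript
// Least-squares slope of a daily series, in metric units per day.
// For a latency metric, a positive slope means the page is getting slower.
function dailyTrend(values: number[]): number {
  const n = values.length;
  if (n < 2) return 0;
  const meanX = (n - 1) / 2; // x is the day index 0..n-1
  const meanY = values.reduce((sum, v) => sum + v, 0) / n;
  let num = 0;
  let den = 0;
  values.forEach((y, x) => {
    num += (x - meanX) * (y - meanY);
    den += (x - meanX) ** 2;
  });
  return num / den;
}

// Hypothetical daily p75 LCP values: no day crosses 2500 ms, yet the page keeps creeping slower.
const dailyP75 = [2010, 2030, 2050, 2040, 2080, 2110, 2120];
const slope = dailyTrend(dailyP75); // about 18.6 ms per day
```

Here the fitted slope works out to roughly 130 ms of added LCP per week, a signal worth investigating long before any fixed threshold would fire.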

Avoiding common setup mistakes: Don’t try to track everything at once. Start with the metrics that matter most to your business, then expand your monitoring as you get comfortable with the data.

Best Real User Monitoring Tools: What to Look For

Not all RUM tools are created equal. When evaluating Real User Monitoring solutions, look for:

Essential RUM Features

Real-time data collection and alerting: You need to know about performance problems as they happen, not days or weeks later.

Core Web Vitals tracking: Your RUM tool should track the metrics that Google uses for ranking and that correlate with user experience.

User segment analysis: The ability to slice performance data by device type, geography, user behavior, and other dimensions that matter to your business.

Integration with synthetic testing: When RUM spots a performance problem, you can use synthetic testing tools to get actionable tips on how to make it faster.

Request Metrics Advantages

Setup that doesn’t suck. Most RUM tools make you configure a million settings and wait days for useful data. Request Metrics gives you insights within minutes of adding the script to your site.

Data you can actually use. Many RUM tools dump spreadsheets of metrics on you. Request Metrics focuses on showing you what’s broken and what to fix first, not overwhelming you with numbers.

Alerts that matter. Get notified when performance issues actually affect your users and your business, not every time a metric fluctuates by 50 milliseconds.

Connects to what you care about. See how performance impacts your conversions, not just abstract scores that don’t relate to your business goals.

Your Next Step

Ready to see how your website actually performs for real users? Install Request Metrics Real User Monitoring and start getting insights about your actual user experience within minutes.

In your first week with RUM, you’ll likely discover performance issues you never knew existed and optimization opportunities that can directly impact your business metrics. More importantly, you’ll finally have the data you need to make performance improvements that actually matter to your users.

Stop guessing about your website’s performance. Start measuring what really matters with Real User Monitoring.